SQL Patterns (and how to exploit them) Part I

This blog is part one of our series, SQL Patterns (and how to exploit them). In this series, we will explore increasingly complex patterns that commonly occur in SQL and how Single Origin can exploit those patterns to consolidate and reuse SQL queries - saving users time and money. Check out our website if you want to learn more about Single Origin.

Today, it is impossible for data organizations to scale without sacrificing simplicity. The complexity of queries, pipelines, and infrastructure tends to grow exponentially. Meanwhile, the ability to detect duplicate work and reduce redundancies fades away. Additionally, metrics and definitions used by data and business operators can become difficult to discern and share.

Single Origin brings simplicity to the chaos through its semantic management platform. Semantic management as a term may seem broad, so in today’s article, we’ll focus on its potential impact on two data operators in the field. Each will be given a task and will write a query. Their queries contain a commonplace pattern that Single Origin can detect, and armed with this knowledge, our data operators will be able to reduce the complexity of their operations and improve the reusability of their SQL.

The Problem

Meet Jan and Michael - they work on the analytics team at Big Datum Incorporated. It’s Jan’s first day as a Data Analyst on the Promotions team, and she’s been given her first assignment. Jan’s boss wants her to build a dashboard that computes the per-customer coupon amount and net profit for:

- all out of town customers

- buying from stores in 5 cities

- over the weekend

- across three consecutive years.

Michael, a seasoned professional as a Senior Data Analyst on the Sales team, has a recurring task to generate an analysis report that is similar but distinct. He must compute the per-customer extended sales price, extended list price, and extended tax for the same segment of customers.

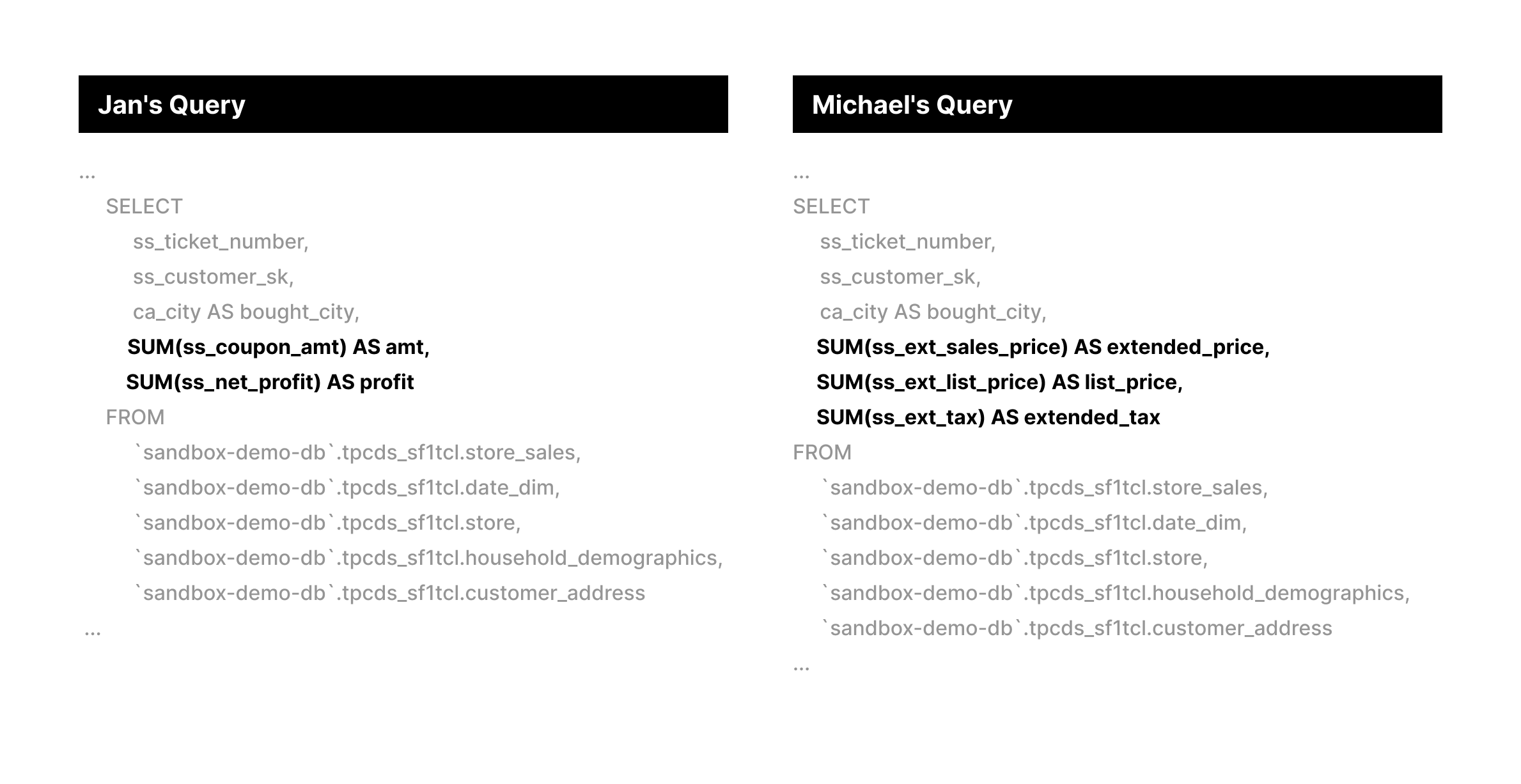

Working independently, Jan and Michael come up with the following two queries:

Jan's Query

SELECT

c_last_name,

c_first_name,

ca_city,

bought_city,

ss_ticket_number,

amt,

profit

FROM (

SELECT

ss_ticket_number,

ss_customer_sk,

ca_city AS bought_city,

SUM(ss_coupon_amt) AS amt,

SUM(ss_net_profit) AS profit

FROM

`sandbox-demo-db`.tpcds_sf1tcl.store_sales,

`sandbox-demo-db`.tpcds_sf1tcl.date_dim,

`sandbox-demo-db`.tpcds_sf1tcl.store,

`sandbox-demo-db`.tpcds_sf1tcl.household_demographics,

`sandbox-demo-db`.tpcds_sf1tcl.customer_address

WHERE

store_sales.ss_sold_date_sk = date_dim.d_date_sk

AND store_sales.ss_store_sk = store.s_store_sk

AND store_sales.ss_hdemo_sk = household_demographics.hd_demo_sk

AND store_sales.ss_addr_sk = customer_address.ca_address_sk

AND ( household_demographics.hd_dep_count = 6

OR household_demographics.hd_vehicle_count = 0 )

AND date_dim.d_dow IN (6,

0)

AND date_dim.d_year IN (2000,

2000 + 1,

2000 + 2)

AND store.s_city IN ( 'Midway',

'Fairview',

'Fairview',

'Fairview',

'Fairview' )

GROUP BY

ss_ticket_number,

ss_customer_sk,

ss_addr_sk,

ca_city ) AS dn,

`sandbox-demo-db`.tpcds_sf1tcl.customer,

`sandbox-demo-db`.tpcds_sf1tcl.customer_address AS current_addr

WHERE

ss_customer_sk = c_customer_sk

AND customer.c_current_addr_sk = current_addr.ca_address_sk

AND current_addr.ca_city <> bought_city

ORDER BY

c_last_name,

c_first_name,

ca_city,

bought_city,

ss_ticket_number

LIMIT

100

Michael's Query

SELECT

c_last_name,

c_first_name,

ca_city,

bought_city,

ss_ticket_number,

extended_price,

list_price,

extended_tax

FROM

(

SELECT

ss_ticket_number,

ss_customer_sk,

ca_city AS bought_city,

SUM(ss_ext_sales_price) AS extended_price,

SUM(ss_ext_list_price) AS list_price,

SUM(ss_ext_tax) AS extended_tax

FROM

`sandbox-demo-db`.tpcds_sf1tcl.store_sales,

`sandbox-demo-db`.tpcds_sf1tcl.date_dim,

`sandbox-demo-db`.tpcds_sf1tcl.store,

`sandbox-demo-db`.tpcds_sf1tcl.household_demographics,

`sandbox-demo-db`.tpcds_sf1tcl.customer_address

WHERE

store_sales.ss_sold_date_sk = date_dim.d_date_sk

AND store_sales.ss_store_sk = store.s_store_sk

AND store_sales.ss_hdemo_sk = household_demographics.hd_demo_sk

AND store_sales.ss_addr_sk = customer_address.ca_address_sk

AND (

household_demographics.hd_dep_count = 6

OR household_demographics.hd_vehicle_count = 0

)

AND date_dim.d_dow IN (6, 0)

AND date_dim.d_year IN (2000, 2000 + 1, 2000 + 2)

AND store.s_city IN (

'Midway',

'Fairview',

'Fairview',

'Fairview',

'Fairview'

)

GROUP BY

ss_ticket_number,

ss_customer_sk,

ss_addr_sk,

ca_city

) AS dn,

`sandbox-demo-db`.tpcds_sf1tcl.customer,

`sandbox-demo-db`.tpcds_sf1tcl.customer_address AS current_addr

WHERE

ss_customer_sk = c_customer_sk

AND customer.c_current_addr_sk = current_addr.ca_address_sk

AND current_addr.ca_city <> bought_city

ORDER BY

c_last_name,

c_first_name,

ca_city,

bought_city,

ss_ticket_number

LIMIT

100

Jan and Michael’s queries may look familiar: they are from the tpc-ds, a well-known benchmark for big data analysis.

The Pattern

On inspection, you can see that the above queries share the same FROM, WHERE, GROUP BY, and ORDER BY clauses. Where they differ is what they are selecting. This pattern, where there is a difference in the selection, is commonly found in organizations writing lots of SQL.

SQL is a flexible language with no fixed style guide. So while the above example may be easy to notice, Michael could write an equivalent SQL statement with differing syntax. For example, Michael’s 62-line query without CTEs could be re-written as a 91-line query with CTEs. This would make detecting similarities more difficult for human reviewers.

Michael's Query with CTE

WITH

date_dim AS (

SELECT

*

FROM

`sandbox-demo-db`.tpcds_sf1tcl.date_dim

WHERE

d_dow IN (6,

0)

AND d_year IN (2000,

2000 + 1,

2000 + 2) ),

storeAS (

SELECT

*

FROM

`sandbox-demo-db`.tpcds_sf1tcl.store

WHERE

s_city IN ( 'Midway',

'Fairview',

'Fairview',

'Fairview',

'Fairview' ) ),

household_demographics AS (

SELECT

*

FROM

`sandbox-demo-db`.tpcds_sf1tcl.household_demographics

WHERE

hd_dep_count = 6

AND hd_vehicle_count = 0 ),

aggregations AS (

SELECT

ss_ticket_number,

ss_customer_sk,

ca_city AS bought_city,

SUM(ss_ext_sales_price) AS extended_price,

SUM(ss_ext_list_price) AS list_price,

SUM(ss_ext_tax) AS extended_tax

FROM

`sandbox-demo-db`.tpcds_sf1tcl.store_sales

JOIN

date_dim

ON

store_sales.ss_sold_date_sk = date_dim.d_date_sk

JOIN

store

ON

store_sales.ss_store_sk = store.s_store_sk

JOIN

household_demographics

ON

store_sales.ss_hdemo_sk = household_demographics.hd_demo_sk

JOIN

`sandbox-demo-db`.tpcds_sf1tcl.customer_address

ON

store_sales.ss_addr_sk = customer_address.ca_address_sk

GROUP BY

ss_ticket_number,

ss_customer_sk,

ss_addr_sk,

ca_city )

SELECT

c_last_name,

c_first_name,

ca_city,

bought_city,

ss_ticket_number,

extended_price,

list_price,

extended_tax

FROM

aggregations

JOIN

`sandbox-demo-db`.tpcds_sf1tcl.customer

ON

aggregations.ss_customer_sk = customer.c_customer_sk

JOIN

`sandbox-demo-db`.tpcds_sf1tcl.customer_address AS current_addr

ON

customer.c_current_addr_sk = current_addr.ca_address_sk

WHERE

current_addr.ca_city <> bought_city

ORDER BY

c_last_name,

c_first_name,

ca_city,

bought_city,

ss_ticket_number

LIMIT

100

Ultimately, the two queries are similar and, crucially, will generate duplicative work. Despite this, Jan and Michael fail to recognize the overlap. They don't review each other's SQL queries - they're on different teams after all. Even if they did, would they realize how to benefit from the similarities? Unfortunately, the two analysts write their queries in isolation. Their queries live on, perhaps used in an ETL pipeline or periodical query job. Jan and Michael never leverage the fact that they can utilize the same logic, and Big Datum is left to foot the bill.

That's where Single Origin comes in.

The Solution

Single Origin connects to your data warehouse and ingests your queries. Queries are then automatically decomposed, unlocking the following benefits:

- Pattern detection: Single Origin can detect patterns in queries across users, even when the query structure is completely different. This information is used to prevent duplicate logic.

- Reusability: Each piece of the query is defined and discoverable. This allows users to utilize components of prior queries. Single Origin can even re-construct one query from the component pieces of multiple queries, allowing for extensibility.

Let's take another look at Jan and Michael's workflows, but they'll use Single Origin for duplication detection and SQL reusability this time.

Michael logs into Single Origin and imports his initial query. The query is cataloged, so when Jan imports her query, she is alerted that duplication is present. She can then reuse Michael's definition and build on top of it.

In this scenario, Single Origin can help Jan and Michael consolidate their two query jobs into one job, saving on compute and maintenance costs.

Notice that Jan and Michael have gone from managing individual queries to managing shared definitions. This paradigm shift enables Jan and Michael to collaborate more easily, move faster, and build more efficient queries. Big Datum Inc is one step closer to achieving simplicity.

There are more patterns Single Origin can detect and more ways to reuse their SQL. Next week, we'll look at a more complex pattern. In our final post of the series, we will explore the ways Single Origin will leverage this technology in the future to deliver high-impact solutions for data operators and organizations at scale. So stay tuned!

For more articles, follow us on LinkedIn and subscribe to our blog! If you’re interested in learning more about the Single Origin platform, check out our website. For deep dives into how Single Origin’s SQL processing engine works, check out this series.

Until next time!